- Yaro on AI and Tech Trends

📊These Charts Show the State of AI in 2025.

Midweek check-in, fam

Here’s what we’ve got today… We’re diving into charts that reveal just how fast AI is advancing—and how plummeting costs are making the tech more accessible than ever.

But while clean energy just hit a global record, why is the U.S. turning back to coal?

And as AI grows more powerful, where do we draw the red lines on safety and ethics?

Let’s get into it.

These Charts Show the State of AI in 2025.

Trump pushes coal to power AI boom.

🧰 AI Tools

AI Red Lines: Can AI be safe by design?

📰 News and Trends.

These Charts Show the State of AI in 2025.

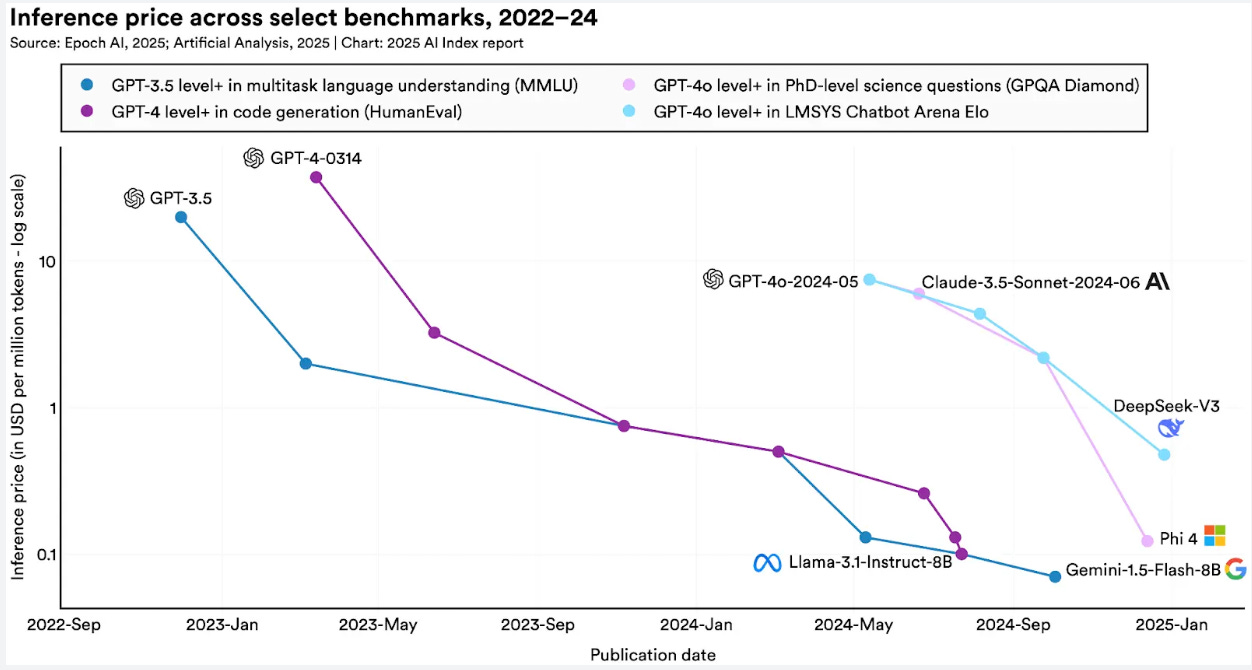

Stanford’s 2025 AI Index is out and reveals a maturing, more efficient AI ecosystem—with major shifts in costs, capabilities, regulation, and risks:

Smaller but Smarter: In 2024, Microsoft’s Phi-3-mini (3.8B parameters) hit GPT-3.5-level benchmark scores, a bar that took PaLM’s 540B parameters to clear in 2022. That’s a 142x reduction in model size.

Massive Cost Drop: In just two years, inference costs fell more than 280x, from $20 to $0.07 per million tokens (2022–2024). If that pace held, million-token inference could cost less than a penny by 2026 (potentially under $0.00025).

China Narrows the Gap: The U.S. still led with 40 major models in 2024 vs. China’s 15, but Chinese models nearly matched U.S. performance on key benchmarks. DeepSeek, Manus AI, and other Chinese AI systems took a big leap earlier this year.

AI Incidents Surge: Reported harms hit 233 cases in 2024, up 56.4% YoY, including deepfakes and chatbot-linked tragedies.

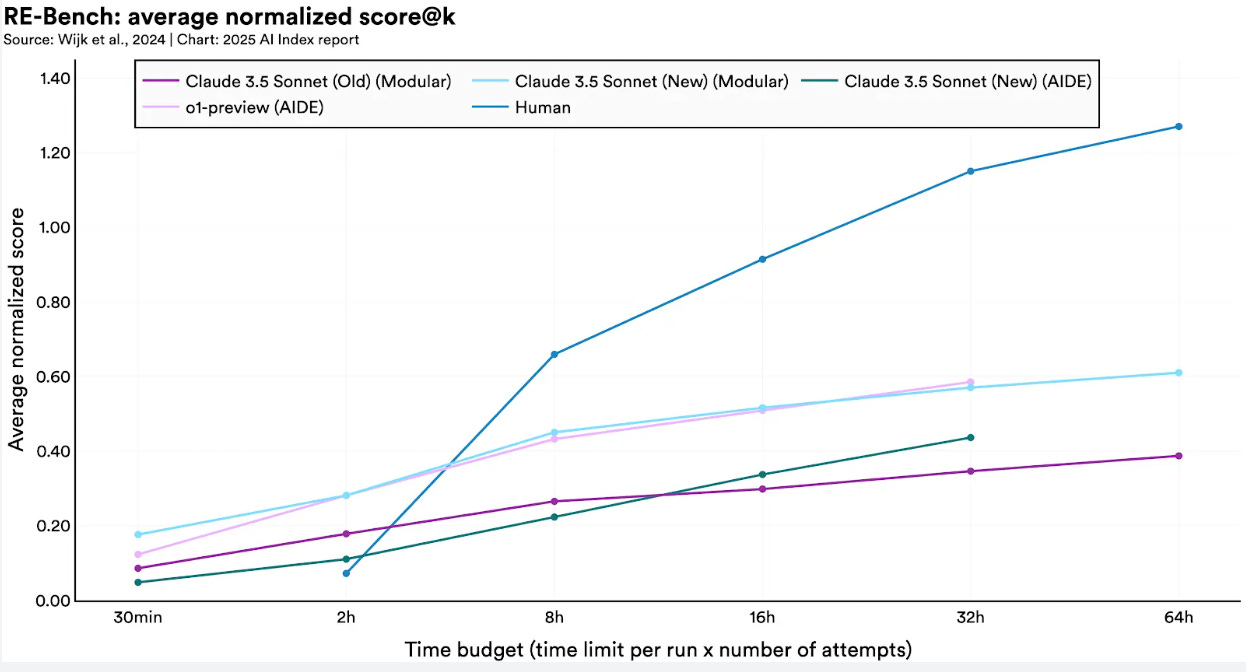

AI Agents Improve: On short tasks, AI agents scored 4x higher than humans, but humans still win on longer, more complex tasks by a 2-to-1 margin.

Investment Booms: U.S. private AI investment reached $109B in 2024, 12x China’s and 24x the UK’s. In generative AI, U.S. investment outpaced the EU and UK combined by $25.5B.

Business Adoption Jumps: 78% of organizations reported using AI in 2024 (up from 55% the year before), and 71% used generative AI in at least one business function (up from 33%).

AI Medical Devices Soar: The FDA approved 223 AI-enabled medical devices in 2023, up from just 6 in 2015.

States Lead Regulation: AI-related state laws in the U.S. jumped from 49 in 2023 to 131 in 2024, while federal laws lag behind.

Asia More Optimistic: 83% of people in China believe AI brings more benefits than risks, compared with just 39% in the U.S.

Bottom line: AI is cheaper, faster, and more integrated—but also raising concerns as adoption and incidents rise.
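The headline ratios in this issue are simple arithmetic, so they are easy to sanity-check. The Python sketch below recomputes them from the figures quoted above; it fetches nothing and uses no data beyond those numbers. The `fold_change` helper is just an illustrative name, and the 2026 figure rests on a loud assumption: that the roughly 280x drop from 2022–2024 simply repeats over the next two years. That is a naive extrapolation, not a forecast from the AI Index.

```python
# Back-of-envelope checks on ratios quoted from the 2025 AI Index summary above.
# All inputs are the figures as reported in this newsletter; nothing is fetched.

def fold_change(before: float, after: float) -> float:
    """How many times smaller `after` is than `before` (e.g. 540 -> 3.8)."""
    return before / after

# Model size: PaLM (540B params, 2022) vs. Phi-3-mini (3.8B params, 2024)
size_reduction = fold_change(540e9, 3.8e9)

# Inference price per million tokens: $20 (2022) -> $0.07 (2024)
cost_reduction = fold_change(20.0, 0.07)

# Naive projection (assumption): the same ~280x drop repeats from 2024 to 2026
projected_2026 = 0.07 / 280

# Incidents: 233 in 2024 after a 56.4% year-over-year rise implies the 2023 count
incidents_2023 = 233 / 1.564

# State AI laws: 49 (2023) -> 131 (2024), expressed as a percentage increase
state_law_growth_pct = (131 - 49) / 49 * 100

if __name__ == "__main__":
    print(f"Model size reduction: {size_reduction:.0f}x")
    print(f"Inference cost reduction: {cost_reduction:.0f}x")
    print(f"Projected 2026 cost per million tokens: ${projected_2026:.5f}")
    print(f"Implied 2023 incident count: {incidents_2023:.0f}")
    print(f"State AI law growth: {state_law_growth_pct:.0f}%")
```

Running it shows that $20 to $0.07 works out to about 286x, which is why the drop is best read as "more than 280x", and that dividing $0.07 by 280 is where the sub-$0.00025 projection comes from.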

Get venture-funded immersive tech into your portfolio.

SoundSelf blends sound, light, and biofeedback to create immersive wellness experiences like nothing else. Clinical studies show a 52% increase in wellness, a 34% decrease in depression, and a 49% drop in anxiety. Users report feeling instant clarity and emotional breakthroughs, often within minutes. This is clinically validated technology that’s redefining the future of wellness, and now, you can be part of it. Own a piece of SoundSelf for as little as $100.

Read the Offering information carefully before investing. It contains details of the issuer’s business, risks, charges, expenses, and other information, which should be considered before investing. Obtain a Form C and Offering Memorandum at https://wefunder.com/soundself

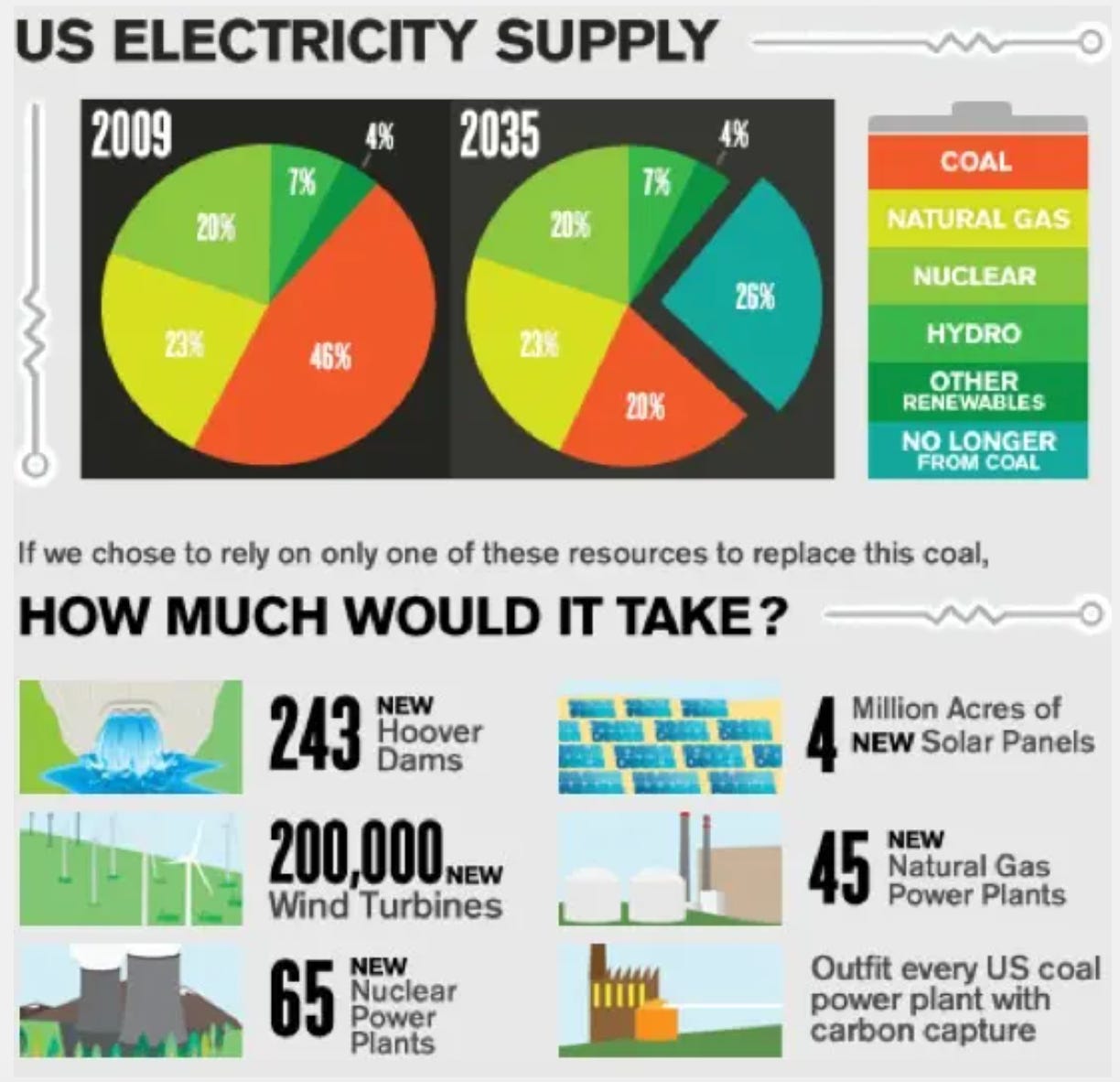

Trump pushes coal to power AI boom.

Trump signed executive orders aimed at reviving the dirty, inefficient coal industry, citing rising electricity demand from AI. The orders invoke the Defense Production Act and give the Department of Energy emergency powers to keep coal plants open; they also fast-track coal leases on federal lands and cut environmental regulations.

Key context:

U.S. coal use is down more than 50% since 2008, and no significant new coal plants are planned. But hey, let’s revive it anyway and roll back Biden’s push for a greener future.

Energy Secretary Chris Wright argues AI will need huge increases in electricity over the next 5–10 years. That’s true, but what about nuclear, including newer small modular reactor (SMR) designs that could bring innovation and cleaner energy for years to come?

Environmental groups call the move regressive and inefficient, warning it will raise costs and pollution.

Bottom line: The new administration is banking on coal to meet AI-era power needs—despite declining demand, economic hurdles, and environmental backlash.

📰 AI News and Trends

Character.AI Introduces Parental Insights to Enhance Teen Safety in the Wake of User Suicides.

DOGE Reportedly Using AI to Snoop on U.S. Federal Workers for Anti-Trump Sentiment.

How University Students Use Claude.

Microsoft’s Copilot can now browse the web and perform actions for you.

Waymo Explores Using Rider Data to Train Generative AI.

🌐 Other Tech news

Kawasaki Reveals Horse-Like Mobility Vehicle: The CORLEO

A Japanese railway station shelter was replaced in under 6 hours by a 3D-printed structure.

DOGE Moves From Secure, Reliable Tape Archives to Hackable Digital Records.

Vibe coding is a whole vibe. The newest trend in programming is to code without writing code: you describe what you want and let AI write it.

AI Red Lines: Can AI be safe by design?

As artificial intelligence systems rapidly evolve in capability and autonomy, the global conversation around their governance is shifting. Increasingly, regulators and developers alike are recognizing the need to move beyond reactive safety measures and toward safety by design—ensuring AI systems are built from the ground up to be safe, aligned, and trustworthy.

One promising concept gaining traction is the use of AI red lines—clear boundaries that define behaviors and uses AI must never cross. These include, for example, autonomous self-replication, breaking into computer systems, impersonating humans, enabling weapons of mass destruction, or conducting unauthorized surveillance. These red lines act as non-negotiable limits that protect against severe harms, whether caused by misuse or by AI systems acting independently.

Crucially, red lines aren't just policy ideals—they are design imperatives. To be effective, they must be embedded into how AI is developed, tested, and deployed. This includes building in technical safeguards, conducting rigorous safety testing, and establishing oversight mechanisms to catch violations before they cause real-world damage.

Three key qualities make red lines meaningful: clarity (the behavior must be precisely defined), universal unacceptability (the action must be widely viewed as harmful), and consistency (the line must hold across contexts and jurisdictions). When done right, red lines help foster a common framework across borders, enabling responsible innovation while preventing a race to the bottom in AI safety.
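Red lines only become design imperatives once they are written down precisely enough for software to check. The toy Python sketch below illustrates that idea under stated assumptions: the category names, `ProposedAction`, and `enforce_red_lines` are all hypothetical, and it assumes some upstream classifier or human reviewer has already labeled each proposed action, which is the genuinely hard part and is not shown. It is not how any particular lab or regulator implements red lines.

```python
from dataclasses import dataclass, field

# Hypothetical red-line categories, named after the examples in the text above.
# "Clarity" in practice means each entry is an exact, testable label.
RED_LINES: frozenset[str] = frozenset({
    "autonomous_self_replication",
    "computer_intrusion",
    "human_impersonation",
    "wmd_assistance",
    "unauthorized_surveillance",
})

@dataclass(frozen=True)
class ProposedAction:
    description: str
    # Labels attached upstream, e.g. by a classifier or human review
    # (assumption: producing reliable labels is out of scope here).
    labels: frozenset[str] = field(default_factory=frozenset)

class RedLineViolation(Exception):
    """Raised when a proposed action crosses one or more red lines."""

def enforce_red_lines(action: ProposedAction) -> ProposedAction:
    """Block the action if any label is a red line; otherwise return it unchanged.

    There is intentionally no override or context argument: the same check
    applies everywhere, mirroring the "consistency" property.
    """
    crossed = action.labels & RED_LINES
    if crossed:
        raise RedLineViolation(
            f"blocked {action.description!r}: {', '.join(sorted(crossed))}"
        )
    return action

if __name__ == "__main__":
    ok = ProposedAction("summarize a report", frozenset({"text_generation"}))
    print(enforce_red_lines(ok).description)

    bad = ProposedAction("log into a server without credentials",
                         frozenset({"computer_intrusion"}))
    try:
        enforce_red_lines(bad)
    except RedLineViolation as err:
        print(err)
```

The design choices map onto the qualities above: each red line is an exact label (clarity), and the check takes no context or override argument, so it behaves the same everywhere (consistency). Real safeguards would pair a check like this with rigorous testing and human oversight.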

In high-stakes domains—such as healthcare, defense, or finance—these boundaries matter more than ever. As we enter an era where AI systems influence real-world decisions and outcomes, red lines offer a way to protect society while still unlocking AI’s potential. In doing so, they help shift the focus from fixing harm after the fact to building technology that won’t cause it in the first place.

But how viable is this model in practice? Can we realistically define universal red lines in a world where ethical norms, legal frameworks, and technological capabilities vary widely across countries and contexts? Who gets to decide what’s “unacceptable,” and how do we ensure that enforcement mechanisms are both effective and fair—without stifling innovation? These are open questions that challenge the simplicity of the red line concept and highlight the need for inclusive, globally coordinated approaches to AI governance.

🧰 AI Tools

Download our free list of 1,000+ AI tools.
